A version of this post originally appeared on Tedium, a twice-weekly newsletter that hunts for the end of the long tail.

Here’s a small piece of news you may have missed while you were trying to rebuild your entire life to fit inside your tiny apartment at the beginning of the COVID crisis: Because of the way that the virus shook up just about everything, Google skipped the release of Chrome version 82.


Who cares, you think? Well, users of FTP, or the File Transfer Protocol.

During the pandemic, Google delayed its plan to kill FTP support. Now that things have settled to some degree, the company has announced that it is going back for the kill: Chrome 86 deprecates FTP support once again, and Chrome 88 will remove it for good.

(Mozilla announced similar plans for Firefox, citing security reasons and the age of the underlying code.)

It is one of the oldest protocols the mainstream internet still supports (it turns 50 next year), but mainstream browsers are about to leave it behind.

Let’s ponder the history of FTP, the networking protocol that has held on longer than pretty much any other.

1971

The year that Abhay Bhushan, an India-born master’s student at MIT, first developed the File Transfer Protocol. Coming two years after telnet, FTP was one of the first examples of a working application suite built for what was then known as ARPANET, predating email, Usenet, and even the TCP/IP stack. Like telnet, FTP still has a few uses, but it has lost prominence on the modern internet, largely because of security concerns. Encrypted alternatives have taken its place: for FTP, that is SFTP, a file transfer protocol that runs over the Secure Shell protocol (SSH), which in turn has largely replaced telnet.

FTP is so old it predates email—and at the beginning, actually played the role of an email client

Of the many application-level programs built for the early ARPANET, it perhaps isn’t surprising that FTP is the one that stood above them all to find a path to the modern day.

The reason for that comes down to its basic functionality. It’s essentially a utility that facilitates data transfer between hosts, but the secret to its success is that it smoothed over many of the differences between those hosts. As Bhushan describes in his Request for Comments paper, RFC 114, the biggest challenge of using telnet at the time was that every host was a little different.

“Differences in terminal characteristics are handled by host system programs, in accordance with standard protocols,” he explained, citing both telnet and the remote job entry protocol of the era. “You, however, have to know the different conventions of remote systems, in order to use them.”

The FTP protocol he came up with tried to get around the challenges of directly plugging into the server by using an approach he called “indirect usage,” which allowed for the transfer or execution of programs remotely. Bhushan’s “first cut” at a protocol, still in use in a descendant form decades later, used the directory structure to suss out the differences between individual systems.

In a passage from the RFC, Bhushan wrote:

I tried to present a user-level protocol that will permit users and using programs to make indirect use of remote host computers. The protocol facilitates not only file system operations but also program execution in remote hosts. This is achieved by defining requests which are handled by cooperating processes. The transaction sequence orientation provides greater assurance and would facilitate error control. The notion of data types is introduced to facilitate the interpretation, reconfiguration and storage of simple and limited forms of data at individual host sites. The protocol is readily extendible.
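Bhushan’s “notion of data types” survives in FTP’s modern descendant. Under RFC 959, a file sent with the ASCII type (`TYPE A`) travels in a network-standard form with CRLF line endings, and each host converts to and from its own convention. The Python sketch below illustrates that idea under simplifying assumptions: the function names are hypothetical, and it ignores edge cases such as bare carriage returns and non-ASCII text.

```python
# A sketch of FTP's ASCII transfer type (TYPE A), assuming plain 7-bit text.
# Function names are hypothetical; real clients also handle bare CR bytes, etc.


def to_network_ascii(text: str, local_eol: str = "\n") -> bytes:
    """Sender side: rewrite local line endings as CRLF before transmission."""
    return "\r\n".join(text.split(local_eol)).encode("ascii")


def from_network_ascii(data: bytes, local_eol: str = "\n") -> str:
    """Receiver side: rewrite CRLF line endings into the local convention."""
    return data.decode("ascii").replace("\r\n", local_eol)


# A Unix-style file ("\n") sent to a host that ends lines with a bare CR.
wire = to_network_ascii("line one\nline two\n")
print(wire)                                  # b'line one\r\nline two\r\n'
print(repr(from_network_ascii(wire, "\r")))  # 'line one\rline two\r'
```

Binary transfers (`TYPE I`, for “image”) skip this conversion, which is why sending an executable in ASCII mode could corrupt it.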

In an interview with the podcast Mapping the Journey, Bhushan noted that he came to develop the protocol because of a perceived need for applications on the budding ARPANET system, including email and file transfer. These early applications became the fundamental building blocks of the modern internet and have been greatly improved on in the decades since.

Bhushan noted that, because of the limited computing capabilities of the time, email-style functionality was actually part of FTP early on, allowing messages and files to be distributed through the protocol in a more lightweight format. For four years, FTP was technically email of sorts.

“So we said, ‘Why don’t you put two commands into FTP called mail and mail file?’ So mail is like normal text messages, mail file is mailing attachments, what you have today,” he said in the interview.

Of course, Bhushan was not the only person to put his fingerprints on this fundamental early protocol, and he eventually moved outside academia to a role at Xerox. The protocol he created continued to grow without him, receiving a series of updates in RFCs throughout the 1970s and 1980s, including a 1980 revision (RFC 765) that adapted it to TCP/IP.

While there have been some modest updates since to keep up with the times and add support for newer technologies, the version of the protocol we use today came about in 1985, when Jon Postel and Joyce K. Reynolds developed RFC 959, an update of the prior protocols that is the basis for current FTP software. (Postel and Reynolds, among others, also worked on the domain-name system around this time.) Although the document describes itself as “intended to correct some minor documentation errors, to improve the explanation of some protocol features, and to add some new optional commands,” it is nonetheless the version that stuck.
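RFC 959 is also where FTP’s familiar three-digit server replies are spelled out. The first digit says whether a command succeeded, needs more input, or failed temporarily or permanently, and a reply can span several lines when its first line puts a hyphen after the code (such as `230-`) and its last line repeats the code followed by a space. Here is a minimal Python sketch of a client reading one reply; the `parse_reply` helper is hypothetical and skips details such as Telnet control sequences on the control connection.

```python
from __future__ import annotations

# Meaning of the first digit of an RFC 959 reply code.
REPLY_CLASSES = {
    "1": "positive preliminary",
    "2": "positive completion",
    "3": "positive intermediate",
    "4": "transient negative completion",
    "5": "permanent negative completion",
}


def parse_reply(lines: list[str]) -> tuple[int, str, str]:
    """Read one reply from a list of control-connection lines.

    Returns (code, class description, full reply text). A multi-line reply
    begins with 'NNN-' and ends at the first line that begins with 'NNN '.
    """
    first = lines[0]
    code = first[:3]
    if len(code) != 3 or not code.isdigit() or code[0] not in REPLY_CLASSES:
        raise ValueError(f"malformed reply: {first!r}")

    # Single-line reply: the code is followed by a space (or nothing).
    if first[3:4] != "-":
        return int(code), REPLY_CLASSES[code[0]], first

    # Multi-line reply: keep reading until the terminating 'NNN ' line.
    terminator = code + " "
    for i, line in enumerate(lines[1:], start=1):
        if line.startswith(terminator):
            return int(code), REPLY_CLASSES[code[0]], "\n".join(lines[: i + 1])
    raise ValueError("multi-line reply never terminated")


print(parse_reply(["331 User name okay, need password."])[:2])
# (331, 'positive intermediate')
print(parse_reply(["230-Welcome to the archive.", "230 User logged in."])[0])
# 230
```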

Given its age, FTP has many inherent weaknesses, several of which persist to this day. For example, transferring a folder full of tiny files is intensely inefficient with FTP, because each file transfer opens its own separate data connection. The protocol does much better with a few large files, which keep the number of connections down.
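To see why many small files hurt, consider the commands a passive-mode client might send over the control connection. In RFC 959’s default stream mode, the end of a file is signaled by closing the data connection, so every file needs a fresh one. This Python sketch only builds that command list and counts the data connections; the helper names are hypothetical, and no network traffic is involved.

```python
from __future__ import annotations


def upload_commands(filenames: list[str]) -> list[str]:
    """Control-channel commands a passive-mode client might send (no I/O)."""
    commands = ["TYPE I"]  # binary ("image") type for all transfers
    for name in filenames:
        commands.append("PASV")          # ask the server for a new data port
        commands.append(f"STOR {name}")  # send the file over that new connection
    return commands


def data_connections(commands: list[str]) -> int:
    """In this sketch, each PASV sets up exactly one data connection."""
    return sum(1 for cmd in commands if cmd == "PASV")


small_files = [f"photo_{i:04d}.jpg" for i in range(1000)]
print(data_connections(upload_commands(small_files)))     # 1000
print(data_connections(upload_commands(["photos.zip"])))  # 1
```

Each of those connections costs extra round trips before a single byte of the file moves, which is why bundling small files into one archive often makes FTP transfers noticeably faster.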

In many ways, because FTP was so early in the history of the internet, it came to define the shape of the many protocols that came after. A good way to think about it is to compare it to something that frequently improves by leaps and bounds over a few decades—say, basketball sneakers. Certainly, Converse All-Stars are good shoes and work well in the right setting even today, but for heavy-duty basketball players, something from Nike, potentially with the Air Jordan brand attached, is far more likely to find success.

The File Transfer Protocol is the Converse All-Star of the internet. It was file transfer before file transfer was cool, and it still carries some of that vibe.

“Nobody was making any money off the internet. If anything, it was a huge sink. We were fighting the good fight. We knew there was potential. But anybody who tells you they knew what would happen, they’re lying. Because I was there.”

— Alan Emtage, the creator of Archie, considered the internet’s first search engine, discussing with the Internet Hall of Fame why his invention, which allowed users to search anonymous FTP servers for files, didn’t end up making him rich. Long story short, the internet was noncommercial at the time, and Emtage, a graduate student and technical support staffer at Montreal’s McGill University, was leveraging the school’s network to run Archie without its permission. “But it was a great way of doing it,” he told the site. “As the old saying goes, it’s much easier to ask for forgiveness than to ask for permission.” (Of note: Like Bhushan, Emtage is an immigrant; he was born and raised in Barbados and came to Canada as an honors student.)

Why FTP may be the last link to a certain kind of past that’s still online

As I wrote a few years ago, if you grab an old book about the internet and try to pull up some of the old links, your best chance of actually getting hold of the software it features is through a large corporate FTP site, as these kinds of sites tend not to go offline very often.

Major technology companies, such as Hewlett-Packard, Mozilla, Intel, and Logitech, used these sites for decades to distribute documentation and drivers to end users. And for the most part, these sites are still online, and have content that has just sat there for years.

These sites are most useful when you need access to something really old, like a driver or documentation. (When I was trying to get my Connectix QuickCam working, one certainly came in handy.)

In some ways, this setting can be less nerve-racking than trying to navigate a website, because the interface is consistent and works properly. (Many web interfaces can be pretty nightmarish to dig through when all you want is a driver.) But that cuts both ways—the simplicity also means that FTP often doesn’t handle modern standards quite so well, and can be far more pokey than modern file-transfer methods.

As I wrote for Motherboard last year, these FTP sites (though archived in various places) are growing increasingly hard to reach, as companies move away from this model or decide to take the old sites offline.

As I explained in the piece, which features an interview with Jason Scott of the Internet Archive, the archive is taking steps to preserve these vintage public FTP sites, which at this point could go down at any time.

Scott noted at the time that the long-term survival of these FTP sites was the exception rather than the rule.

“It was just this weird experience that FTP sites, especially, could have an inertia of 15 to 20 years now, where they could be running all this time, untouched,” he said.

With one of the primary use cases of FTP sites hitting the history books once and for all, it may only be a matter of time before they’re gone for good. I recommend, before that happens, diving into one sometime and just seeing the weird stuff that’s there. We don’t live in a world where you can just look at entire file folders of public companies like this anymore, and it’s a fascinating experience even at this late juncture.

“A technology that was ahead of its usage curve, FTP is now attracting a critical mass of business users who are finding transfer by email grossly inefficient or impractical when dealing with large documents.”

— A passage from a 1997 story in Network World that makes the case that FTP, despite its creakiness, was still a good choice for many telecommuters and corporate internet users. While it was written by a ringer (Roger Greene was the president of Ipswitch, a major FTP program developer of the era), his points were nonetheless fitting for the time. FTP was a great way to transmit large files across networks and store them on a server somewhere. The problem is that FTP, while it improved over time, would eventually be outclassed by far more sophisticated replacements, both protocols (BitTorrent, SFTP, rsync, git, even modern variants of HTTP) and cloud computing services such as Dropbox or Amazon Web Services.

Back in the day, I ran an FTP server. It was mostly to share music during my college days, when college students were obsessed with sharing music. Our connections were extremely fast, which made them perfect for running an FTP server.

It was a great way to share a certain musical taste with the world, but the university system eventually got wise to the file-sharing and started capping bandwidth, so that was that … or so I thought. See, I worked in the dorms during the summer, and it turned out that after people left school, the cap was no longer a problem, and I was able to restart the FTP server once again for a couple of months.

Eventually, I moved out and the FTP server went down for good—and more efficient replacements emerged anyway, like BitTorrent, and more legal ones, like Spotify and Tidal. (Do I have regrets about running this server now? Sure. But at the time, I felt like I was sticking it to the man somehow. Which, let’s be honest, I wasn’t.)

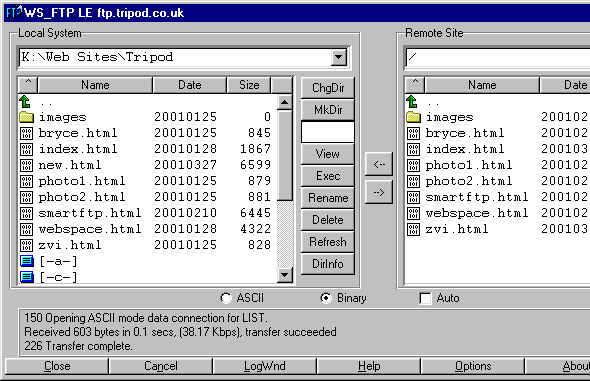

Just as file-sharing has evolved away from those heady times more than 15 years ago, so too have we moved on from the FTP servers of yore. We have since adopted more effective, more secure techniques for remote file management. In 2004, it was widely considered best practice to manage a web server using FTP. Today, with tools like Git making efficient version control possible, it’s seen as risky and inefficient.

Now, even as major browsers drop FTP support in the coming months, it’s not as if we’ll be left without options. Specialized software will, of course, remain available. But more importantly, we’ve replaced the vintage FTP protocol for the right reasons.

Unlike IRC (which lost popular momentum to commercial tools) and Gopher (where a sudden shift to a commercial model stopped its growth dead in its tracks), FTP is being retired from web browsers because its age shows in its lack of security infrastructure: plain FTP sends passwords and data over the network unencrypted.
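A short sketch makes that weakness concrete. FTP logs in with `USER` and `PASS` commands sent as ordinary text on the control connection, so anyone who can capture that traffic can read them. This Python example builds the login bytes a client would send and then pulls the credentials back out, as an eavesdropper might; the account name, password, and helper functions are hypothetical, and no real network capture is involved.

```python
from __future__ import annotations


def login_bytes(user: str, password: str) -> bytes:
    """Bytes a plain (unencrypted) FTP client writes to log in."""
    return f"USER {user}\r\nPASS {password}\r\n".encode("ascii")


def sniff_credentials(captured: bytes) -> dict[str, str]:
    """Recover USER/PASS arguments from captured control-connection bytes."""
    found: dict[str, str] = {}
    for line in captured.split(b"\r\n"):
        verb, _, argument = line.partition(b" ")
        if verb.upper() in (b"USER", b"PASS"):
            found[verb.upper().decode("ascii")] = argument.decode("ascii", "replace")
    return found


# Hypothetical credentials for a web server managed over FTP.
captured = login_bytes("webmaster", "hunter2")
print(sniff_credentials(captured))  # {'USER': 'webmaster', 'PASS': 'hunter2'}
```

SFTP avoids this by carrying the entire session inside an encrypted SSH connection, so an observer sees only ciphertext.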

Some of its more prominent use cases, like publicly accessible anonymous FTP servers, have essentially fallen out of vogue. But its primary use case has ultimately been replaced with more secure, more modern versions of the same thing, such as SFTP.

And I’m sure some person in some suitably technical job somewhere is going to claim that FTP will never die because there will always be a specialized use case for it somewhere. Sure, whatever. But for the vast majority of people, when Chrome disconnects FTP from the browser, they likely won’t find a reason to reconnect.

If FTP’s departure from the web browser speeds up its final demise, so be it. But for 50 years, in one shape or another, it has served us well.