Twitter launched its version of Instagram Stories this week, promising that all bad takes and unflattering pictures posted to the Fleets feature would automatically disappear after a day.

But VICE News has learned that Twitter doesn’t automatically delete users’ ephemeral posts after 24 hours as it publicly claims. Instead it stores them for at least 30 days because it is worried the feature will be used to spread disinformation and extremist content.

On Tuesday, at the exact same time as the company’s CEO Jack Dorsey was being grilled on Capitol Hill about election disinformation, Twitter released its new feature, saying, “Fleets are for sharing momentary thoughts — they help start conversations and only stick around for 24 hours.”

Essentially, Fleets are an almost identical copy of Instagram’s Stories, which in turn was an even more brazen rip-off of Snapchat’s ephemeral messaging feature, also called Stories. In Fleets, you can post pictures and videos, write text, and react to regular tweets.

But the ephemeral nature of the posts also poses a major problem for the company: It will allow people to spread disinformation or extremist content more discreetly and out of the view of the regular Twitter feed.

Marc-André Argentino, an extremism researcher currently studying for a PhD at Concordia University, decided the best way to test the potential for Fleets to spread disinformation and extremist content was to try it out himself.

Argentino found he was able to post a wide variety of banned material linked to conspiracy groups like QAnon and militia groups like the Proud Boys. While Fleets doesn’t allow users to post direct links, they can post messages with the addresses of websites, which can be easily converted into real links.

Argentino also found that tagging another user in a fleet starts a messaging thread with that user, and that users can even tag people who have blocked them, an issue the company says it’s trying to fix.

“This could be a threat vector when it comes to cyberbullying, harassment, stalking, violent threats, and mobbing with limited recourse for reporting,” Argentino said. “It’s a way to isolate and target an individual.”

Because fleets do not appear in the regular feed of tweets, they will make it much harder for researchers to track the spread of disinformation and extremist content, Argentino said, arguing that Twitter should have reached out to experts like him before it launched the feature.

“We would happily red team this shit for you because features like this make our lives a living hell,” Argentino said.

Hours after VICE News asked Twitter about the concerns raised by Argentino’s posts, his account was temporarily suspended.

“We are always listening to feedback and working to improve Twitter to make sure it’s safe for people to contribute to the public conversation,” Twitter spokesman Trenton Kennedy told VICE News.

But the company also revealed that, despite what it said in its public announcement, fleets are not automatically deleted after 24 hours. It retains them as part of its effort to review posts that have been reported as problematic.

“Depending on the timing of the report and the short lifespan of a Fleet, a reported Fleet may expire before we can take action,” Kennedy said. “We do, however, keep copies of Fleets for a limited time even after they expire for purposes of reviewing them for violations.”

Twitter said that fleets are stored for up to 30 days, which can be extended for posts that break Twitter’s rules, in order to give the company time to conduct a proper review and take enforcement action.

During that period, users can access their old fleets in the Your Twitter Data section of the website or app.

Instagram and Snapchat both retain metadata related to their ephemeral messaging features, which can be subpoenaed by governments. But while users can retain a copy of the content of their disappearing messages, the companies themselves don’t keep copies of that content.

Here’s what else is happening in the world of election disinformation.

Trump continues his embrace of QAnon

On Wednesday night, President Donald Trump took his embrace of QAnon one step further, by posting a link to a video featuring the man who ran the website where Q posted all his messages.

Ron Watkins was the administrator of 8kun, a fringe message board previously known as 8chan, before he claimed to resign on Election Day — though some experts believe he is still involved in 8kun.

He is the son of Jim Watkins, the owner of 8kun and the person some experts believe is in fact Q, or who at the very least orchestrates who can post as Q.

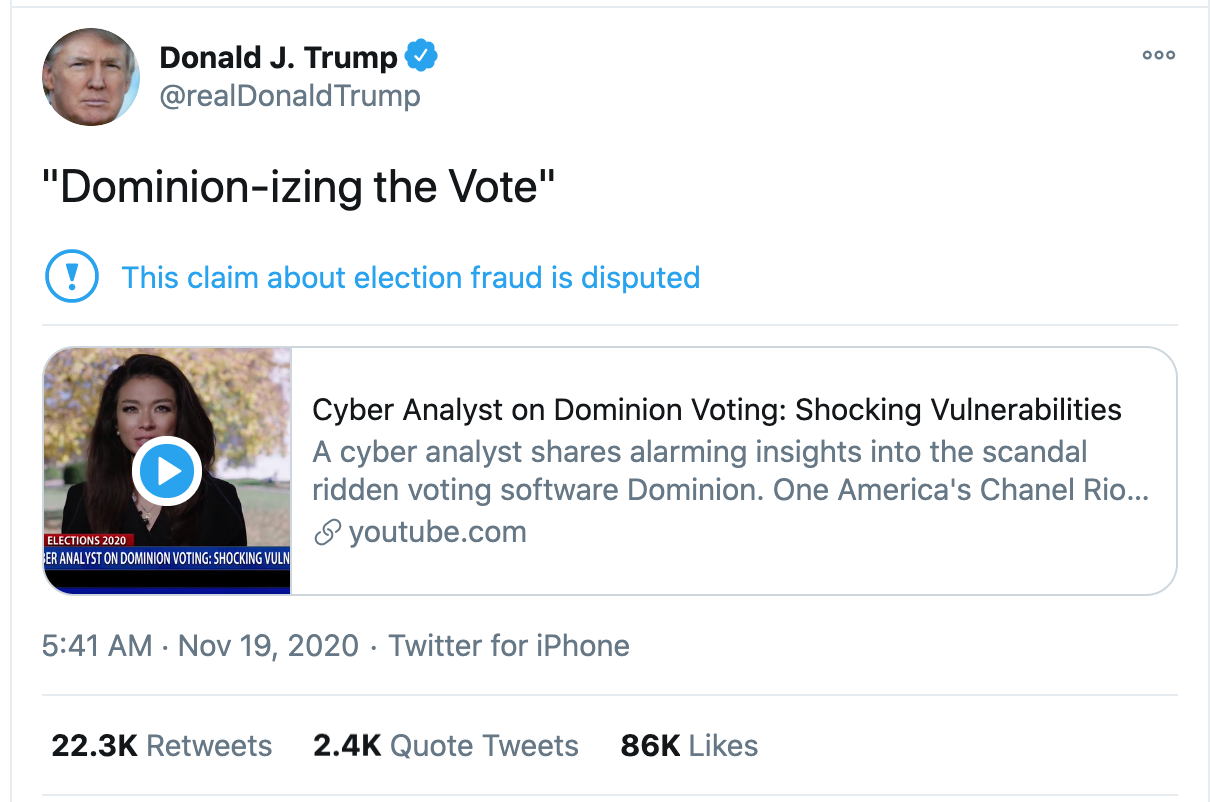

Since claiming to leave his post at 8kun, Ron Watkins has emerged as one of the main boosters of the Dominion Voting Systems conspiracy theory, which claims without evidence that the company’s voting machines changed Trump votes to Biden votes.

The video Trump tweeted features Watkins speaking on the far-right, pro-Trump One America News Network and calling himself a “large system technical analyst.” The YouTube video, which doesn’t have a label flagging misinformation, has been viewed 882,000 times already.

Lots of people are watching YouTube videos supporting claims of election fraud

YouTube says that more people are watching videos that dispute election fraud than are watching videos that support the bogus claims, pointing out that its recommendation algorithm is working to boost the former while limiting the reach of the latter.

And according to a new report from Transparency Tube, an independent research project that has been studying misinformation trends on YouTube, that is true. But it is also true that the number of people watching videos that claim election fraud is real remains huge.

According to the report, 138 million people watched videos supporting claims of election fraud in the week from Nov. 3 to Nov. 10. They reached these videos not through the recommended section, but via search, channel subscriptions, and direct links.

Parler users are struggling to sign up because of “special character” password requirements

Parler, the right-wing social network that is backed by the Mercer family, of Cambridge Analytica fame, has seen a massive surge in users in the last two weeks as conservatives annoyed that Twitter and Facebook are calling out Donald Trump’s lies look for a place where they can openly spread conspiracy theories in peace.

But a number of those looking to sign up are running into problems at the first hurdle. According to the reviews of Parler’s app, many of those seeking to sign up cannot get their heads around the requirement to include a “special character” in their password.

And that’s not the only problem. On Google’s app store, the Parler app has a sizeable number of one-star reviews with users complaining about not being able to open an account, being unable to get verified, and seeing their battery drain dramatically.

“Terrible. Worst social media app by far, way too many glitches. How could a company put out an app that can’t even run?” one disappointed user said.