In June, an employee at a tech company received a strange voicemail from a person who identified himself as the CEO, asking for “immediate assistance to finalize an urgent business deal.” As it turns out, despite sounding almost like the CEO, the voicemail was actually created with computer software. It was an audio deepfake, according to a security company that investigated the incident.

NISOS, a security consulting company based in Alexandria, Virginia, analyzed the voicemail and determined that it was fake, a piece of synthetic audio designed to trick the receiver, the company told Motherboard. On Thursday, NISOS published a report on the incident, which it shared with Motherboard in advance.


For the last three years, journalists and researchers have spent a lot of time worrying about the possibility of deepfake videos—forged clips where faces are swapped for those of celebrities or politicians using artificial intelligence—influencing elections or causing a major international incident. In reality, that hasn’t happened, and deepfakes have mostly been used to create non-consensual porn videos.

Deepfake audio has been demonstrated in some flashy tech demos. But the technology is also beginning to be used in the criminal world. Last year, a company revealed that an employee had made a transfer of $240,000 after receiving a call from someone who appeared to be their CEO. The call was actually a deepfake, according to the company’s insurer.

NISOS would not disclose the name of the company involved in this particular case, but agreed to share the audio.

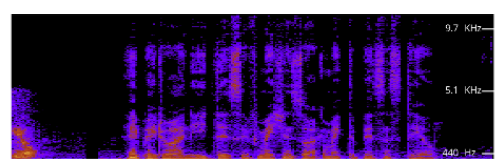

The employee who received the voicemail, however, did not fall for it and flagged it to the company, which called in NISOS to investigate. NISOS researchers analyzed the audio with a spectrogram tool called Spectrum3d in an attempt to detect any anomalies.

“You could tell there was something wrong about the audio,” NISOS researcher Dev Badlu told Motherboard in a phone call. “It looks like they basically took every single word, chopped it up, and then pasted them back in together.”

Badlu said he could tell it was fake because there were a lot of stark peaks and valleys within the audio, which is not normal in regular speech. Moreover, he added, when he lowered the volume of the alleged CEO, the background was “dead silent,” with no background noise whatsoever, which was a clear sign of a forgery.
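To make those two cues concrete, here is a rough sketch of how one might measure them on raw audio samples. It is not NISOS's method and it does not reproduce what Spectrum3d does: it uses simple per-frame loudness instead of a spectrogram, and the function names, the frame length, and the toy clips are all assumptions made up for illustration.

```python
import math
import random

def frame_levels_db(samples, frame_len=400):
    """RMS level of each frame in dB relative to full scale (1.0)."""
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        # Clamp so that pure digital silence gives a finite value, not -inf.
        levels.append(20 * math.log10(max(rms, 1e-10)))
    return levels

def background_floor_db(levels):
    """Estimate the background level as the mean of the quietest tenth of frames."""
    quietest = sorted(levels)[:max(1, len(levels) // 10)]
    return sum(quietest) / len(quietest)

def largest_jump_db(levels):
    """Largest level change between neighbouring frames ("peaks and valleys")."""
    return max((abs(b - a) for a, b in zip(levels, levels[1:])), default=0.0)

def toy_clip(noise_amp, seed=0, rate=8000):
    """Hypothetical test signal: 0.25 s tone bursts separated by 0.25 s gaps."""
    rng = random.Random(seed)
    samples = []
    for n in range(rate * 2):  # two seconds of audio
        in_word = (n // 2000) % 2 == 0
        tone = 0.5 * math.sin(2 * math.pi * 220 * n / rate) if in_word else 0.0
        samples.append(tone + rng.uniform(-noise_amp, noise_amp))
    return samples

if __name__ == "__main__":
    for label, noise in (("room noise", 0.01), ("dead silent", 0.0)):
        levels = frame_levels_db(toy_clip(noise))
        print(label, round(background_floor_db(levels), 1),
              round(largest_jump_db(levels), 1))
```

In this toy setup, the clip whose gaps are exact zeros reports a far lower background floor and a far larger frame-to-frame jump than the clip with faint room noise throughout. A real recording would first have to be decoded into samples, and any cutoff for "too silent" would have to be chosen by comparing against known-genuine voicemails.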

Rob Volkert, another researcher at NISOS, said they think the criminals were trying the technology out to see if the targets would give them a call back. In other words, he said, this was just step one of a presumably more complex operation that was relatively close to being successful.

“It definitely sounds human. They checked that box as far as: does it sound more robotic or more human? I would say more human,” Volkert said. “But it doesn’t sound like the CEO enough.”

Have you ever received or heard an attempt to use deepfake audio to defraud a company? If you have any tips about the use of deepfake technology, you can contact Lorenzo Franceschi-Bicchierai securely from a non-work phone or computer on Signal at +1 917 257 1382, by OTR chat at lorenzofb@jabber.ccc.de, or by email at lorenzofb@vice.com.

Even though this deepfake audio got caught, Volkert, Badlu and their colleagues believe this is a sign that criminals are starting to experiment with the technology, and we may see more attempts like this one.

“The ability to generate synthetic audio extends an e-criminal’s toolkit and the criminal at the end of the day still has to effectively use social engineering tactics to induce someone into taking an action,” NISOS wrote in its report. “Criminals and potentially broader nation state actors also learn from each other, so as these high-profile cases gain more notoriety and success, we anticipate more illicit actors trying them and learning from others who have paved the way.”
