It has become a cliche to declare that the future is full of both “great promise and great peril.” Nonetheless, this aphorism expresses an important fact about the Janus-faced nature of our increasingly powerful technologies. If humanity realizes the best possible future, we could quite possibly usher in an era of unprecedented human flourishing, happiness, and value. But if the great experiment of civilization fails, our species could meet the same fate as the dinosaurs.

I find it helpful to think about what a child born today could plausibly expect to witness in her or his lifetime. Since technological change appears to be unfolding according to Ray Kurzweil’s Law of Accelerating Returns, this imaginative activity can actually yield some fascinating insights about our evolving human condition, which may soon become a posthuman condition as “person-engineering technologies” turn us into increasingly artificial cyborgs.


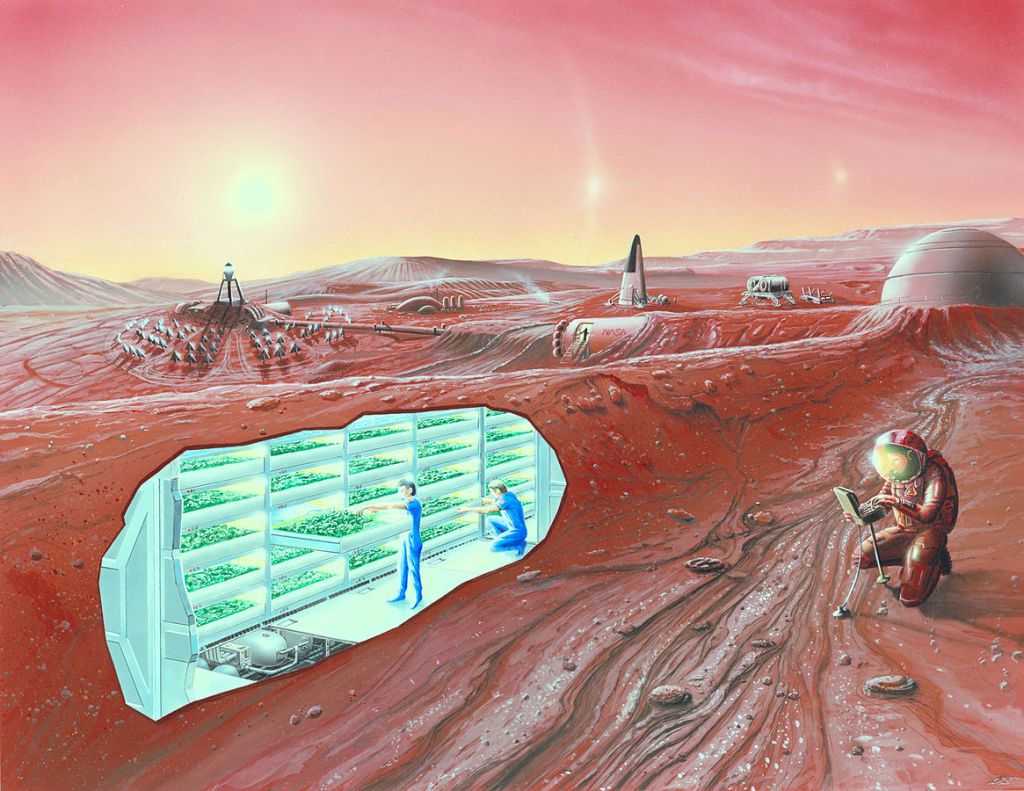

Martian Colonies

In a billion years or so, the sun will sterilize the planet as it turns into a red giant, eventually swallowing our planet whole in—according to one study—7.59 billion years. If we want to survive beyond this point, we will need to find a new planetary spaceship to call home. But even more immediately, evolutionary biology tells us that the more geographically spread out a species is, the greater its probability of survival. Elon Musk claims that “there is a strong humanitarian argument for making life multi-planetary…in order to safeguard the existence of humanity in the event that something catastrophic were to happen.” Similarly, Stephen Hawking—who recently booked a trip to space on Richard Branson’s Virgin Galactic spaceship—believes that humanity has about 100 years to colonize space or face extinction.

There are good reasons to believe that this will happen in the coming decades. Musk has stated that SpaceX will build a city on the fourth rock from the sun “in our lifetimes.” And NASA has announced that it “is developing the capabilities needed to send humans to an asteroid by 2025 and Mars in the 2030s.” NASA is even planning to “send a robotic mission to capture and redirect an asteroid to orbit the moon. Astronauts aboard the Orion spacecraft will explore the asteroid in the 2020s, returning to Earth with samples.”

Unprecedented Agricultural Production

According to a Pew study, the global population will reach approximately 9.3 billion by 2050. To put this in perspective, there were only 6 billion people alive in 2000, and roughly 200 million living when Jesus was (supposedly) born. This explosion has led to numerous Malthusian predictions of a civilizational collapse. Fortunately, the Green Revolution obviated such a disaster in the mid-twentieth century, although it also introduced new and significant environmental externalities that humanity has yet to overcome.

It appears that “in the next 50 years we will need to produce as much food as has been consumed over our entire human history,” to quote Megan Clark, who heads Australia’s Commonwealth Scientific and Industrial Research Organisation. She said this “means in the working life of my children, more grain than ever produced since the Egyptians, more fish than eaten to date, more milk than from all the cows that have ever been milked on every frosty morning humankind has ever known.” Although technology has enabled the world to effectively double its food output between 1960 and 2000, we face unprecedented challenges such as climate change and the Anthropocene extinction.

Kardashev Transition

Theoretical physicist Michio Kaku has claimed that human civilization could transition to a Type 1 civilization on the Kardashev scale within the next 100 years. A Type 1 civilization can harness virtually all of the energy available to its planet (including all the electromagnetic radiation sent from its sun), perhaps even controlling the weather, earthquakes, and volcanoes. The Oxford philosopher Nick Bostrom tacitly equates a Type 1 civilization with the posthuman condition of “technological maturity,” which he describes as “the attainment of capabilities affording a level of economic productivity and control over nature close to the maximum that could feasibly be achieved.”


Right now, human civilization would qualify as a Type 0, although emerging “world-engineering technologies” could change this in the coming decades, as they enable our species to manipulate and rearrange the physical world in increasingly significant ways. But Kaku worries that the transition from a Type 0 to a Type 1 civilization carries immense risks to our survival. As he puts it, “the danger period is now because we still have the savagery. We still have all the passions. We have all the sectarian fundamentalist ideas circulating around. But we also have nuclear weapons. We have chemical, biological weapons capable of wiping out life on Earth.” In other words, as I have written, archaic beliefs about how the world ought to be are on a collision course with neoteric technologies that could turn the entire planet into one huge graveyard.

Indefinite Longevity

This is a primary goal of many transhumanists, who see aging as an ongoing horror show that kills some 55.3 million people each year. It is, transhumanists say, “deathist” to argue that halting senescence through technological interventions is wrong: dying from old age should be no more involuntary than dying from childhood leukemia.

The topic of anti-aging technology has gained a great deal of attention over the past few decades due to the work of Aubrey de Grey, who cofounded the Peter Thiel-funded Methuselah Foundation. According to the Harvard geneticist George Church, scientists could effectively reverse aging within (wait for it) the next decade or so. This means actually making older people young again, not just stabilizing the healthy physiological state of people in their mid-20s. As Church puts it, the ultimate goal isn’t “about stalling or curing, it’s about reversing.” One possible way of achieving this end involves the new breakthrough gene-editing technology called CRISPR/Cas9, as Oliver Medvedik discusses in a 2016 TED talk.

Catastrophic Environmental Collapse

According to a 2012 article in Nature, we could be approaching a sudden, irreversible, catastrophic collapse of the global ecosystem that unfolds on timescales of a decade or so. It would usher in a new biospheric normal that could make continued human existence impossible. In fact, studies confirm that our industrial society has initiated only the sixth mass extinction event in the last 3.8 billion years, and other reports find that the global population of wild vertebrates declined between 1970 and 2012 by a staggering 58 percent. Among the causes of this global disaster in slow motion are industrial pollution, ecosystem fragmentation, habitat destruction, overexploitation, overpopulation, and of course climate change.

Deforestation. Image: Dikshajhingan/Wikimedia

Yet another major study claims that there are nine “planetary boundaries” that demarcate a “safe operating space for humanity.” As the authors of this influential paper write, “anthropogenic pressures on the Earth System have reached a scale where abrupt global environmental change can no longer be excluded…Transgressing one or more planetary boundaries may be deleterious or even catastrophic” to our systems. Unfortunately, humanity has already crossed three of these do-not-cross boundaries, namely climate change, the rate of biodiversity loss (i.e., the sixth mass extinction), and the global nitrogen cycle. As Fredric Jameson has famously said, “it has become easier to imagine the end of the world than the end of capitalism.”

Nuclear Attack

The only use of nuclear weapons in conflict came at the end of World War II, when the US dropped two atomic bombs on the unsuspecting folks of the Japanese archipelago. But there are strong reasons for believing that another bomb will be used in the coming years, decades, or century. First, consider that the US appears to have entered into a “new Cold War” with Russia, as the Russian Prime Minister Dmitry Medvedev puts it. Second, North Korea continues both to develop its nuclear capabilities and to threaten to use nuclear weapons against its perceived enemies. Third, after Donald Trump was elected US president, the venerable Bulletin of the Atomic Scientists moved the Doomsday Clock minute-hand forward by 30 seconds, in part because of “disturbing comments about the use and proliferation of nuclear weapons” made by Trump.

And fourth, terrorists are more eager than ever to acquire and detonate a nuclear weapon somewhere in the Western world. In a recent issue of their propaganda magazine, the Islamic State fantasized about acquiring a nuclear weapon from Pakistan and exploding it in a major urban center of North America. According to the Stanford cryptologist and founder of NuclearRisk.org, Martin Hellman, the probability of a nuclear bomb going off is roughly 1 percent every year from the present, meaning that “in 10 years the likelihood is almost 10 percent, and in 50 years 40 percent if there is no substantial change.” As the leading public intellectual Lawrence Krauss told me in a previous interview for Motherboard, unless humanity destroys every last nuclear weapon on the planet, the use of a nuclear weapon is more or less inevitable.
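Hellman's figures can be checked with a few lines of arithmetic. The sketch below is only an illustration, not his actual model: it assumes the 1 percent annual risk stays constant and that each year is independent of the others, and the function name `cumulative_probability` is my own.

```python
def cumulative_probability(annual_probability: float, years: int) -> float:
    """Chance of at least one event within `years` years.

    Assumption (not from Hellman): the annual probability is constant
    and each year is independent of the others.
    """
    # Probability that nothing happens in any single year, compounded
    # over the whole period, then subtracted from certainty.
    return 1.0 - (1.0 - annual_probability) ** years


for years in (10, 50):
    risk = cumulative_probability(0.01, years)
    print(f"{years} years: {risk:.1%}")
```

Under those assumptions the result is about 9.6 percent over 10 years and about 39.5 percent over 50, which matches the “almost 10 percent” and “40 percent” in the quote.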

Machine Superintelligence

Homo sapiens are currently the most intelligent species on the planet, where “intelligence” is defined as the mental capacity to attain suitable means to achieve one’s ends. But this could change if scientists successfully create a machine-based general intelligence that exceeds human-level intelligence. As scholars have observed for decades, this would be the most significant event in human history, since it would entail that our collective fate would then depend more on the superintelligence’s actions than on our own, just as the fate of the mountain gorilla now depends more on human actions than on its own. Intelligence confers power, so a greater-than-human-level intelligence would have greater-than-human-level power over the future of our species, and the biosphere more generally.


According to one survey, nearly every AI expert who was polled agrees that one or more machine superintelligences will join our species on planet Earth by the end of this century. Although the field of AI has a poor track record of seeing the future—just consider Marvin Minsky’s claim in 1967 that “Within a generation…the problem of creating artificial intelligence will substantially be solved”—recent breakthroughs in AI suggest that real progress is being made and that this progress could put us on a trajectory toward machine superintelligence.

Civilizational Collapse

In their 1955 manifesto, Bertrand Russell and Albert Einstein famously wrote:

Many warnings have been uttered by eminent men of science and by authorities in military strategy. None of them will say that the worst results are certain. What they do say is that these results are possible, and no one can be sure that they will not be realized…We have found that the men who know most are the most gloomy.

This more or less describes the situation with respect to existential risk scholars, where “existential risks” are worst-case scenarios that would, as two researchers put it, cause the permanent “loss of a large fraction of expected value.” Those who actually study these risks assign shockingly high probabilities to an existential catastrophe in the foreseeable future.

An informal 2008 survey of scholars at an Oxford University conference suggests a 19 percent chance of human extinction before 2100. And the world-renowned cosmologist Lord Martin Rees writes in his 2003 book Our Final Hour that civilization has a mere 50-50 chance of surviving the present century intact. Other scholars claim that humans will probably be extinct by 2110 (Frank Fenner) and that the likelihood of an existential catastrophe is at least 25 percent (Bostrom). Similarly, the Canadian biologist Neil Dawe suggests that he “wouldn’t be surprised if the generation after him witnesses the extinction of humanity.” Even Stephen Hawking seems to agree with these doomsday estimates, as suggested above, by arguing that humanity will go extinct unless we colonize space within the next 100 years.

So, the “promise and peril” cliche should weigh heavily on people’s minds—especially when they head to the voting booth. If humanity can get its act together, the future could be unprecedentedly good; but if tribalism, ignorance, and myopic thinking continue to dominate, the last generation may already have been born.

Phil Torres is the founding director of the X-Risks Institute. He has written about apocalyptic terrorism, emerging technologies, and global catastrophic risks. His forthcoming book is called Morality, Foresight, and Human Flourishing: An Introduction to Existential Risks.