This piece is part of an ongoing Motherboard series on Facebook’s content moderation strategies. You can read the rest of the coverage here.

On Friday, at least 49 people were killed in terror attacks against mosques in Christchurch, New Zealand. One apparent shooter broadcast the attack on Facebook Live, the social network’s streaming service. The footage was graphic, and Facebook deleted the attacker’s Facebook and Instagram accounts, although archives of the video have spread across other online services.

The episode highlights the difficulties of moderating live content, where an innocuous-seeming video can quickly turn violent with little or no warning. Motherboard has obtained internal Facebook documents showing how the social media giant has developed tools to make this process somewhat easier for its tens of thousands of content moderators. Motherboard has also spoken to senior employees of Facebook as well as sources with direct knowledge of the company’s moderation strategy, who described how Live was, and sometimes still is, a difficult type of content to keep tabs on.

“I couldn’t imagine being the reviewer who had to witness that livestream in New Zealand,” a source with direct knowledge of Facebook’s content moderation strategies told Motherboard. Motherboard granted some sources in this story anonymity to discuss internal Facebook mechanisms and procedures.

As with any content on Facebook, be it posts, photos, or pre-recorded videos, users can report Live broadcasts that they believe contain violence, hate speech, harassment, or other behaviour that violates the terms of service. Content moderators then review the report and decide what to do with the Live stream.

According to an internal training document for Facebook content moderators obtained by Motherboard, moderators can ‘snooze’ a Facebook Live stream, meaning it will resurface to moderators every 5 minutes so they can check whether anything has developed. Moderators also have the option to ignore it, essentially closing the report; delete the stream; or escalate the stream to a specialized team of reviewers, who scrutinise whether it contains a particular type of material such as terrorism. In the case of terrorism, escalation would flag the stream to Facebook’s Law Enforcement Response Team (LERT), which works directly with police. In the Christchurch case, Facebook told Motherboard it had been in contact with New Zealand law enforcement since the start of the unfolding incident.
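
To make the review flow concrete, here is a minimal sketch of how a queue with those four options might behave. It is an illustration only: the names `ReviewQueue`, `report`, `next_due`, and `decide` are hypothetical and do not come from the Facebook document. Only the four actions and the 5-minute snooze interval are taken from the reporting above.

```python
import heapq
from dataclasses import dataclass, field
from enum import Enum

# The training document describes snoozed streams resurfacing every 5 minutes.
SNOOZE_SECONDS = 5 * 60


class Action(Enum):
    SNOOZE = "snooze"      # look again later
    IGNORE = "ignore"      # close the report
    DELETE = "delete"      # take the stream down
    ESCALATE = "escalate"  # hand off to a specialized team


@dataclass
class ReviewQueue:
    """Hypothetical review queue; not Facebook's actual tooling."""
    _heap: list = field(default_factory=list)      # (time_due, stream_id) min-heap
    escalated: list = field(default_factory=list)
    deleted: list = field(default_factory=list)

    def report(self, stream_id: str, now: float) -> None:
        # A user report makes the stream due for review immediately.
        heapq.heappush(self._heap, (now, stream_id))

    def next_due(self, now: float):
        # Return the next stream whose review time has arrived, or None.
        if self._heap and self._heap[0][0] <= now:
            return heapq.heappop(self._heap)[1]
        return None

    def decide(self, stream_id: str, action: Action, now: float) -> None:
        if action is Action.SNOOZE:
            # Re-queue the stream so it resurfaces after the snooze interval.
            heapq.heappush(self._heap, (now + SNOOZE_SECONDS, stream_id))
        elif action is Action.DELETE:
            self.deleted.append(stream_id)
        elif action is Action.ESCALATE:
            self.escalated.append(stream_id)
        # IGNORE closes the report, so nothing is re-queued.
```

In this sketch a snoozed stream is simply pushed back onto the queue with a later due time, which is one straightforward way to model content that “resurfaces” on a fixed interval.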

Got a tip? You can contact this reporter securely on Signal on +44 20 8133 5190, OTR chat on jfcox@jabber.ccc.de, or email joseph.cox@vice.com.

When escalating a potential case of terrorism in a livestream, moderators are told to answer a set of questions about the offending content: What is happening that indicates the user is committing an act of terrorism? When has the user indicated that harm will occur? Who is being threatened? Does the user show weapons in the video? What are the user’s surroundings; are they driving a car?

On Friday, New Zealand Prime Minister Jacinda Ardern explicitly described the Christchurch mosque shootings as a terrorist attack.

Finally, moderators can also “mark” the stream with a variety of different labels, such as disturbing, sensitive, mature, or low quality, the training document obtained by Motherboard adds.

When reviewing streams, moderators may watch the live portion of the material itself as well as sections that already aired, and multiple moderators may work on the same clip, each rewinding to the point the previous reviewer stopped, the document indicates.

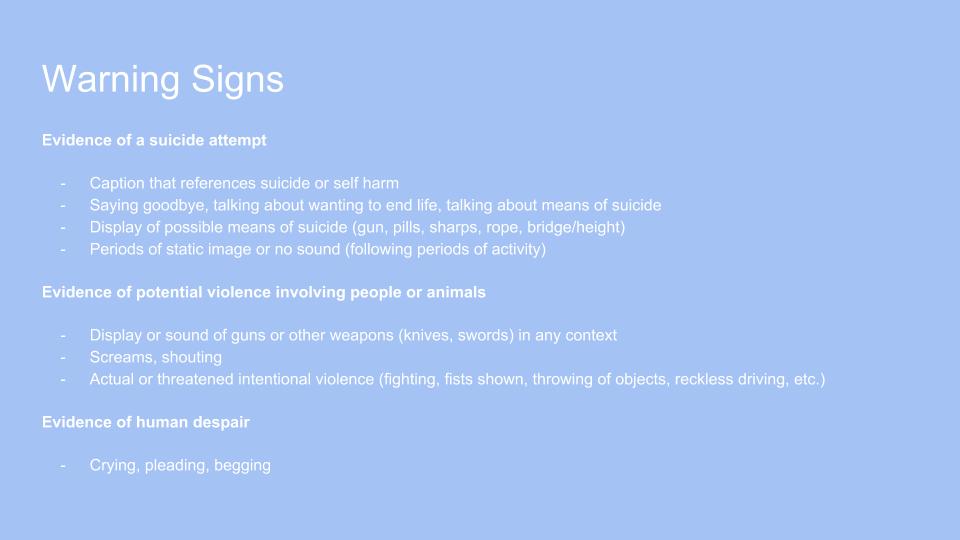

WARNING SIGNS

For Facebook Live video, moderators are told to watch out for “warning signs” which may show a stream is about to include violating content, the training document continues. These include evidence of a suicide attempt, with people saying goodbye, or talking about ending their life. The document also tells moderators to be on the lookout for “Evidence of human despair,” such as “Crying, pleading, begging.”

With relevance to the Christchurch stream and other terrorist attacks, some of those warning signs fall under “Evidence of potential violence involving people or animals.” Specifically, that includes the “Display or sound of guns or other weapons (knives, swords) in any context,” the document reads.

A second source with direct knowledge of Facebook’s moderation strategy told Motherboard, “Live streams can be slightly more difficult in that you’re sometimes trying to monitor the live portion and review an earlier portion at the same time, which you can’t really do with sound.”

Of course, when it comes to weapons, and in particular guns, a stream’s transition from non-violating to violent can happen in milliseconds. In this respect, the fact that users are easily able to broadcast acts of violence via Facebook Live is not an indication that the social media company isn’t trying to find ways to moderate that content, but indicative of the difficulties that live broadcasts present to all platforms.

In a copy of the attack video viewed by Motherboard, the attacker shows several weapons right at the start of the stream, and they frequently reappear through the footage as the attacker drives to the mosque.

“I’m not sure how this video was able to stream for [17] minutes,” the second source with direct knowledge of Facebook’s content moderation strategies told Motherboard.

Because Live has had a rocky moderation history as Facebook learned to control it, the company has created tools to make moderators’ jobs somewhat easier.

“Facebook Live was something that ramped [up] quickly, that from a consumer experience was delivering great customer experiences. But there also was unearthing places we had to do better and were making mistakes, as violence and other policy violations were occurring,” James Mitchell, the leader of Facebook’s risk and response team, told Motherboard in late June last year in an interview at the company’s headquarters.

In the same set of interviews, Neil Potts, Facebook’s public policy director, told Motherboard that moderation issues “became really acute around the live products, Facebook Live, and suicides.”

“We saw just a rash of self-harm, self-injury videos, go online, and we really recognized that we didn’t have a responsive process in place that could handle those, the volume, now we’ve built some automated tools to help us better accomplish that,” he added.

Justin Osofsky, Facebook’s head of global operations, previously told Motherboard, “We then made a concerted effort to address it, and it got much better, by staffing more people, building more tools, evolving our policies.”

One tool moderators have access to is an interface that gives them an overview of a Facebook Live stream. It includes a string of thumbnails to give a better idea of how a stream has progressed; a graph showing the amount of user engagement at particular points; and the ability to speed up the footage or slow it down, perhaps if they want to skip to a part with violating content or take a moment to scrutinise a particular section of the footage.

“You know if this video’s 10 minutes, at minute four and 12 seconds, all of a sudden people are reacting. Probably a pretty good sign to go and see what happened there,” Osofsky added.
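
The idea Osofsky describes, jumping to the moment viewers suddenly start reacting, can be illustrated with a short sketch. This is not Facebook’s method: the function names, the per-second reaction counts, and the thresholds (`window`, `factor`, `min_count`) are all assumptions chosen for illustration. The sketch flags any second whose reaction count is far above the median of the preceding minute.

```python
from statistics import median


def find_engagement_spikes(reactions_per_second, window=60, factor=5.0, min_count=5):
    """Return the seconds at which reactions jump well above the recent baseline.

    Hypothetical heuristic: compare each second's count with the median of
    the previous `window` seconds.
    """
    spikes = []
    for t, count in enumerate(reactions_per_second):
        history = reactions_per_second[max(0, t - window):t]
        baseline = median(history) if history else 0
        # Require an absolute minimum so quiet streams do not trigger on noise.
        if count >= min_count and count > factor * max(baseline, 1):
            spikes.append(t)
    return spikes


def as_timestamp(second):
    """Format a second offset as minutes:seconds, e.g. 252 -> '4:12'."""
    return f"{second // 60}:{second % 60:02d}"


# Invented data: a 10-minute stream with steady low engagement
# and a burst of reactions at 4 minutes 12 seconds.
series = [1] * 600
series[252] = 40
flagged = [as_timestamp(s) for s in find_engagement_spikes(series)]
```

With the invented data above, `flagged` contains the single timestamp `4:12`, the point a reviewer would skip to first.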

Judging by a watermark in the corner of the stream, in this case, the attacker appears to have used an app called LIVE4, which streams footage from a GoPro camera to Facebook Live.

“We were shocked by the news like everyone else,” Alex Zhukov, CEO of LIVE4, told Motherboard in an email.

“We are ready to work with law enforcement agencies to provide any information we have to help the investigation. And also Facebook representatives, to make sure the platform stays safe and is used only as it was intended. We will be blocking access to the app to anyone spreading evil. Unfortunately we [LIVE4] have no technical ability to block any streams while they are happening,” he added.

Mia Garlick from Facebook New Zealand told Motherboard in a statement, “Our hearts go out to the victims, their families and the community affected by this horrendous act. New Zealand Police alerted us to a video on Facebook shortly after the livestream commenced and we quickly removed both the shooter’s Facebook and Instagram accounts and the video. We’re also removing any praise or support for the crime and the shooter or shooters as soon as we’re aware. We will continue working directly with New Zealand Police as their response and investigation continues.”

Jason Koebler contributed reporting.
