Computers are funny when it comes to time. They don’t really like to wait.

As long as no input/output (I/O) operations are involved, a program can rip through a sequence of discrete instructions like a hot knife through butter. Add I/O, however, and things get weird. I/O generally happens at much slower timescales, so a programmer has to make a decision. Either the program just hangs there and waits for the I/O operation to complete (which could be fetching some data from a hard drive or reading keyboard input), or it continues on and handles the completed I/O op somewhere further along in its execution stream. In that latter case, more instructions will have run between the time the I/O op starts and the time it completes. And from the programmer’s perspective, that’s a messy proposition. It’s often preferable and safer to just wait before computing anything else.

These two approaches are known as synchronous and asynchronous programming, respectively. Each makes sense at a wildly different I/O timescale. At nanosecond scales, or billionths of a second, it makes sense to just hang out and wait for the operation (such as a fetch from DRAM) to complete. At millisecond scales, or thousandths of a second, it makes more sense to be asynchronous. The computer has time to do other stuff, in other words.
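To make the distinction concrete, here is a minimal Python sketch of both approaches. Everything in it is an illustrative assumption rather than anything from the paper: `fake_io_sync` and `fake_io_async` are hypothetical stand-ins that simulate a slow I/O operation with a sleep, and the "other stuff" is just a counter.

```python
import asyncio
import time


def fake_io_sync(delay_s):
    """Hypothetical blocking I/O: the whole program stalls until it returns."""
    time.sleep(delay_s)
    return "data"


async def fake_io_async(delay_s):
    """Hypothetical non-blocking I/O: other code may run while this waits."""
    await asyncio.sleep(delay_s)
    return "data"


def run_synchronously(delay_s):
    # Synchronous: start the I/O and just hang there until it finishes.
    result = fake_io_sync(delay_s)
    other_work = 0  # nothing else got done in the meantime
    return result, other_work


async def run_asynchronously(delay_s):
    # Asynchronous: start the I/O, then keep doing other things
    # and pick up the result once it is ready.
    task = asyncio.create_task(fake_io_async(delay_s))
    other_work = 0
    while not task.done():
        other_work += 1         # stand-in for useful computation
        await asyncio.sleep(0)  # give the event loop a chance to run the I/O task
    return task.result(), other_work


if __name__ == "__main__":
    print(run_synchronously(0.01))
    print(asyncio.run(run_asynchronously(0.01)))
```

The asynchronous version gets work done during the wait, but it also has to track a pending task and check on it later, which is the messiness described above.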

New hardware and architectures are making things a lot messier. We now have microsecond scales to contend with. A microsecond, or a millionth of a second, sits pretty much right in the middle, which means that it’s a lot harder to decide between synchronous and asynchronous approaches. This problem is described in a new paper in the Communications of the ACM by researchers at Google. “Efficient handling of microsecond-scale events is becoming paramount for a new breed of low-latency I/O devices ranging from datacenter networking to emerging memories,” Luiz Barroso and colleagues write.
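One rough way to see why the middle is awkward is to compare how long the I/O takes against what it costs to switch to other work and back. The sketch below is a toy model under loudly stated assumptions: the 5-microsecond switch cost and the factor-of-ten margins are made-up illustrative values, not figures from the paper, and `cheaper_strategy` is a hypothetical helper.

```python
# Assumed, illustrative cost of switching to other work and back (5 microseconds).
SWITCH_COST_S = 5e-6

# Assumed margin: call it a clear win only when one cost is 10x the other.
MARGIN = 10


def cheaper_strategy(io_latency_s, switch_cost_s=SWITCH_COST_S):
    """Return 'wait', 'switch', or 'unclear' for a given I/O latency in seconds."""
    if io_latency_s * MARGIN < switch_cost_s:
        # The I/O finishes long before a switch would pay off: just wait.
        return "wait"
    if io_latency_s > switch_cost_s * MARGIN:
        # The wait dwarfs the switch cost: go do something else.
        return "switch"
    # The two costs are comparable, so neither approach clearly wins.
    return "unclear"


if __name__ == "__main__":
    for label, latency in [("100 ns", 100e-9), ("2 us", 2e-6), ("10 ms", 10e-3)]:
        print(label, "->", cheaper_strategy(latency))
```

Under these assumed numbers, the nanosecond and millisecond cases have obvious answers, while the microsecond case lands in the gray zone.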

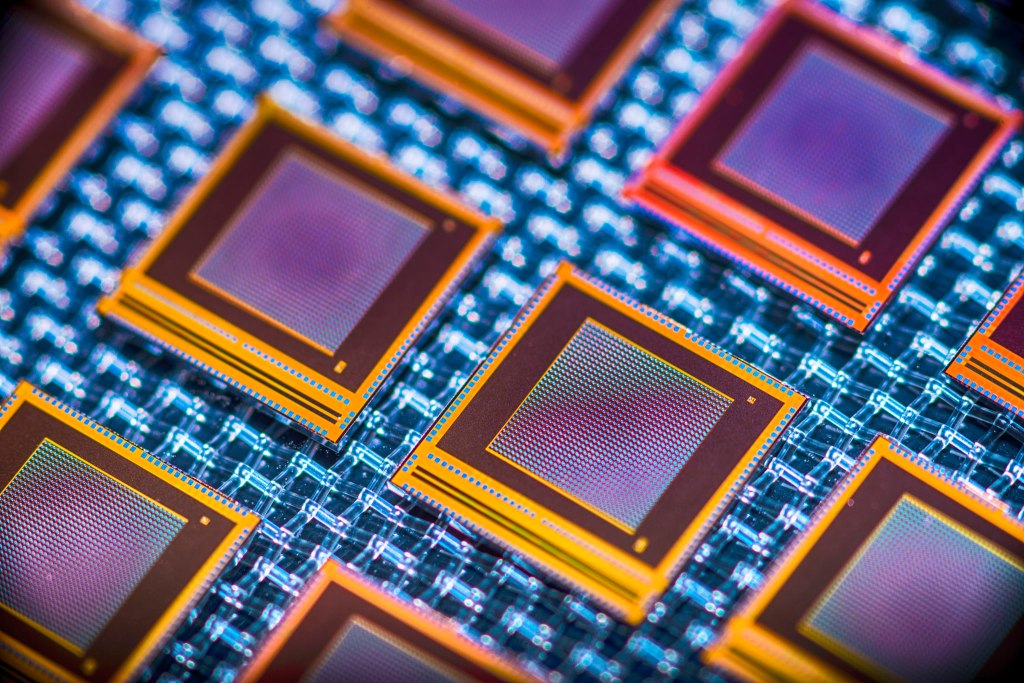

There’s a real risk here. We have microsecond technologies—fast datacenter networks, flash memory, GPU accelerators—but if we can only program them as millisecond technologies, what’s the use?

The Google paper doesn’t offer any silver bullets. Better hardware optimization will help, as will new forms of “lightweight” asynchronous programming, in which program execution can switch to something else while it waits, but only to something far smaller than what millisecond latencies leave room for.

Still, the paper concludes hopefully: “Such optimized designs at the microsecond scale, and corresponding faster I/O, can in turn enable a virtuous cycle of new applications and programming models that leverage low-latency communication, dramatically increasing the effective computing capabilities of warehouse-scale computers.”